Deep learning examples

This notebook contains examples with neural network models.


[1]:

import torch

import random

import pandas as pd

import numpy as np

from etna.datasets.tsdataset import TSDataset

from etna.pipeline import Pipeline

from etna.transforms import DateFlagsTransform

from etna.transforms import LagTransform

from etna.transforms import LinearTrendTransform

from etna.metrics import SMAPE, MAPE, MAE

from etna.analysis import plot_backtest

from etna.models import SeasonalMovingAverageModel

import warnings

def set_seed(seed: int = 42):
    """Set random seed for reproducibility."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)

warnings.filterwarnings("ignore")

/workdir/etna/etna/settings.py:79: UserWarning: etna[statsforecast] is not available, to install it, run `pip install etna[statsforecast]`

warnings.warn("etna[statsforecast] is not available, to install it, run `pip install etna[statsforecast]`")

1. Creating TSDataset

We will use a toy dataset. Let’s load it and take a look.

[2]:

original_df = pd.read_csv("data/example_dataset.csv")

original_df.head()

[2]:

| | timestamp | segment | target |
|---|---|---|---|
| 0 | 2019-01-01 | segment_a | 170 |
| 1 | 2019-01-02 | segment_a | 243 |
| 2 | 2019-01-03 | segment_a | 267 |
| 3 | 2019-01-04 | segment_a | 287 |
| 4 | 2019-01-05 | segment_a | 279 |

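Our library works with a special data structure, `TSDataset`. Let’s create one the same way as in the “Get started” notebook: first convert the long-format dataframe to the wide format with `TSDataset.to_dataset`, then wrap it in a `TSDataset` with daily frequency.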

[3]:

df = TSDataset.to_dataset(original_df)

ts = TSDataset(df, freq="D")

ts.head(5)

[3]:

| segment | segment_a | segment_b | segment_c | segment_d |
|---|---|---|---|---|
| feature | target | target | target | target |
| timestamp | | | | |
| 2019-01-01 | 170 | 102 | 92 | 238 |
| 2019-01-02 | 243 | 123 | 107 | 358 |
| 2019-01-03 | 267 | 130 | 103 | 366 |
| 2019-01-04 | 287 | 138 | 103 | 385 |
| 2019-01-05 | 279 | 137 | 104 | 384 |
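To make the reshaping concrete, here is a minimal pure-Python sketch of a long-to-wide pivot, which is conceptually what `TSDataset.to_dataset` does. The helper `to_wide` is hypothetical and is not part of etna; the real method works on pandas dataframes and returns columns indexed by segment and feature. The values for the first two days of segment_a and segment_b are copied from the table above.

```python
from collections import defaultdict

# Long format: one row per (timestamp, segment) pair, as in the CSV file.
long_rows = [
    ("2019-01-01", "segment_a", 170),
    ("2019-01-02", "segment_a", 243),
    ("2019-01-01", "segment_b", 102),
    ("2019-01-02", "segment_b", 123),
]


def to_wide(rows):
    """Hypothetical helper: pivot rows into {timestamp: {(segment, feature): value}}."""
    wide = defaultdict(dict)
    for timestamp, segment, target in rows:
        wide[timestamp][(segment, "target")] = target
    # Sort by timestamp so that rows follow time order.
    return dict(sorted(wide.items()))


wide = to_wide(long_rows)
for timestamp, row in wide.items():
    print(timestamp, row)
```

Each timestamp now holds one value per (segment, feature) pair, which mirrors the two-level column header shown in the `ts.head(5)` output.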

2. Architecture

Our library uses PyTorch Forecasting to work with time series neural networks. There are two ways to use pytorch-forecasting models: the default way, where only the encoder and decoder lengths are set, and via `PytorchForecastingDatasetBuilder`, which allows passing extra features.

To include extra features we use the `PytorchForecastingDatasetBuilder` class.

Let’s look at it closer.

[4]:

from etna.models.nn.utils import PytorchForecastingDatasetBuilder

[5]:

?PytorchForecastingDatasetBuilder

Init signature:

PytorchForecastingDatasetBuilder(

max_encoder_length: int = 30,

min_encoder_length: Union[int, NoneType] = None,

min_prediction_idx: Union[int, NoneType] = None,

min_prediction_length: Union[int, NoneType] = None,

max_prediction_length: int = 1,

static_categoricals: Union[List[str], NoneType] = None,

static_reals: Union[List[str], NoneType] = None,

time_varying_known_categoricals: Union[List[str], NoneType] = None,

time_varying_known_reals: Union[List[str], NoneType] = None,

time_varying_unknown_categoricals: Union[List[str], NoneType] = None,

time_varying_unknown_reals: Union[List[str], NoneType] = None,

variable_groups: Union[Dict[str, List[int]], NoneType] = None,

constant_fill_strategy: Union[Dict[str, Union[str, float, int, bool]], NoneType] = None,

allow_missing_timesteps: bool = True,

lags: Union[Dict[str, List[int]], NoneType] = None,

add_relative_time_idx: bool = True,

add_target_scales: bool = True,

add_encoder_length: Union[bool, str] = True,

target_normalizer: Union[pytorch_forecasting.data.encoders.TorchNormalizer, pytorch_forecasting.data.encoders.NaNLabelEncoder, pytorch_forecasting.data.encoders.EncoderNormalizer, str, List[Union[pytorch_forecasting.data.encoders.TorchNormalizer, pytorch_forecasting.data.encoders.NaNLabelEncoder, pytorch_forecasting.data.encoders.EncoderNormalizer]], Tuple[Union[pytorch_forecasting.data.encoders.TorchNormalizer, pytorch_forecasting.data.encoders.NaNLabelEncoder, pytorch_forecasting.data.encoders.EncoderNormalizer]]] = 'auto',

categorical_encoders: Union[Dict[str, pytorch_forecasting.data.encoders.NaNLabelEncoder], NoneType] = None,

scalers: Union[Dict[str, Union[sklearn.preprocessing._data.StandardScaler, sklearn.preprocessing._data.RobustScaler, pytorch_forecasting.data.encoders.TorchNormalizer, pytorch_forecasting.data.encoders.EncoderNormalizer]], NoneType] = None,

)

Docstring: Builder for PytorchForecasting dataset.

Init docstring:

Init dataset builder.

Parameters here is used for initialization of :py:class:`pytorch_forecasting.data.timeseries.TimeSeriesDataSet` object.

File: /workdir/etna/etna/models/nn/utils.py

Type: type

Subclasses:

We can see a pretty scary signature, but don’t panic, we will look at the most important parameters.

* `time_varying_known_reals`: known real values that change over time (real regressors); it is currently necessary to add the `"time_idx"` variable to this list;
* `time_varying_unknown_reals`: our real-valued target, set it to `["target"]`;
* `max_prediction_length`: our forecasting horizon;
* `max_encoder_length`: length of the past context to use;
* `static_categoricals`: static categorical values; for example, with multiple segments these can be segment characteristics, including the identifier `"segment"`;
* `time_varying_known_categoricals`: known categorical values that change over time (categorical regressors);
* `target_normalizer`: class for normalizing targets across different segments.
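The roles of `max_encoder_length` and `max_prediction_length` can be illustrated with a small sketch. It assumes a plain sliding-window scheme and a hypothetical helper `make_windows`; pytorch-forecasting builds its samples internally and with more options, so this only illustrates what “past context” and “horizon” mean.

```python
def make_windows(series, encoder_length, prediction_length):
    """Hypothetical helper: split a series into (encoder, decoder) pairs."""
    windows = []
    total = encoder_length + prediction_length
    for start in range(len(series) - total + 1):
        encoder = series[start : start + encoder_length]          # past context
        decoder = series[start + encoder_length : start + total]  # values to predict
        windows.append((encoder, decoder))
    return windows


series = list(range(10))  # toy series 0..9
windows = make_windows(series, encoder_length=4, prediction_length=2)
print(len(windows))  # number of samples obtained from the series
print(windows[0])    # first (past context, values to predict) pair
```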

Our library currently supports these models:

* DeepAR,
* TFT.

3. Testing models

In this section we will test our models on the example dataset.

3.1 DeepAR

Before training let’s fix seeds for reproducibility.

[6]:

set_seed()
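As a small illustration of why seeding matters, the sketch below uses only the standard-library generator; `set_seed` applies the same idea to NumPy and PyTorch as well. The helper name `draw` is hypothetical.

```python
import random


def draw(seed, n=3):
    """Seed the generator and draw n pseudo-random numbers."""
    random.seed(seed)
    return [random.random() for _ in range(n)]


first = draw(42)
second = draw(42)
other = draw(7)

print(first == second)  # same seed gives the same sequence
print(first == other)   # a different seed gives a different sequence
```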

Default way

[7]:

from etna.models.nn import DeepARModel

HORIZON = 7

model_deepar = DeepARModel(

encoder_length=HORIZON,

decoder_length=HORIZON,

trainer_params=dict(max_epochs=150, gpus=0, gradient_clip_val=0.1),

lr=0.01,

train_batch_size=64,

)

metrics = [SMAPE(), MAPE(), MAE()]

pipeline_deepar = Pipeline(model=model_deepar, horizon=HORIZON)

[8]:

metrics_deepar, forecast_deepar, fold_info_deepar = pipeline_deepar.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 0

3 | rnn | LSTM | 1.6 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

1.6 K Trainable params

0 Non-trainable params

1.6 K Total params

0.006 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 2.2min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 0

3 | rnn | LSTM | 1.6 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

1.6 K Trainable params

0 Non-trainable params

1.6 K Total params

0.006 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 4.4min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 0

3 | rnn | LSTM | 1.6 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

1.6 K Trainable params

0 Non-trainable params

1.6 K Total params

0.006 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.6min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.6min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 2.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 4.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s
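To picture what `n_folds=3` with `horizon=7` means, the sketch below computes fold boundaries for an expanding-window backtest in which the test windows cover the last `n_folds * horizon` points of the series. This splitting scheme is an assumption made for illustration, `expanding_folds` is a hypothetical helper rather than an etna function, and the series length of 100 is a toy value.

```python
def expanding_folds(n_points, horizon, n_folds):
    """Return ((train_start, train_end), (test_start, test_end)) pairs, end-exclusive."""
    folds = []
    for fold in range(n_folds):
        # Later folds end closer to the end of the series.
        test_end = n_points - (n_folds - 1 - fold) * horizon
        test_start = test_end - horizon
        # The train part always starts at 0 and grows with each fold.
        folds.append(((0, test_start), (test_start, test_end)))
    return folds


folds = expanding_folds(n_points=100, horizon=7, n_folds=3)
for train, test in folds:
    print("train:", train, "test:", test)
```

Under this scheme the model is retrained once per fold, which matches the three training logs printed by the backtest.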

[9]:

metrics_deepar

[9]:

| | segment | SMAPE | MAPE | MAE | fold_number |
|---|---|---|---|---|---|
| 0 | segment_a | 11.457992 | 10.746771 | 58.296635 | 0 |
| 0 | segment_a | 3.176016 | 3.188000 | 16.515830 | 1 |
| 0 | segment_a | 7.292182 | 7.075445 | 38.055716 | 2 |
| 2 | segment_b | 8.014026 | 7.647106 | 20.117401 | 0 |
| 2 | segment_b | 4.404582 | 4.387299 | 10.633224 | 1 |
| 2 | segment_b | 5.720617 | 6.126173 | 13.026169 | 2 |
| 1 | segment_c | 6.136951 | 6.092212 | 10.315135 | 0 |
| 1 | segment_c | 4.311425 | 4.218124 | 7.638395 | 1 |
| 1 | segment_c | 9.405831 | 9.125025 | 16.483989 | 2 |
| 3 | segment_d | 5.807069 | 5.653955 | 50.961984 | 0 |
| 3 | segment_d | 4.531891 | 4.636113 | 36.712263 | 1 |
| 3 | segment_d | 3.950312 | 3.901861 | 31.104091 | 2 |

To summarize, we will take the mean value of the SMAPE metric, because it is scale-tolerant and can therefore be averaged across segments of different magnitude.

[10]:

score = metrics_deepar["SMAPE"].mean()

print(f"Average SMAPE for DeepAR: {score:.3f}")

Average SMAPE for DeepAR: 6.184
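The scale tolerance of SMAPE can be checked directly. The sketch below uses the common percentage definition (the mean of `2 * |y - y_hat| / (|y| + |y_hat|)`, multiplied by 100); it is assumed, not verified here, that etna’s `SMAPE` follows the same form. Multiplying both series by a constant leaves SMAPE unchanged, while MAE grows with the scale. The input numbers are toy values.

```python
def smape(y_true, y_pred):
    """Symmetric mean absolute percentage error, in percent."""
    terms = [2 * abs(t - p) / (abs(t) + abs(p)) for t, p in zip(y_true, y_pred)]
    return 100 * sum(terms) / len(terms)


def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [100.0, 200.0, 300.0]
y_pred = [110.0, 190.0, 330.0]
scaled_true = [10 * v for v in y_true]
scaled_pred = [10 * v for v in y_pred]

print(smape(y_true, y_pred), smape(scaled_true, scaled_pred))  # equal
print(mae(y_true, y_pred), mae(scaled_true, scaled_pred))      # differ by a factor of 10
```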

Dataset Builder: creating dataset for DeepAR with extra features

[11]:

from pytorch_forecasting.data import GroupNormalizer

set_seed()

HORIZON = 7

transform_date = DateFlagsTransform(day_number_in_week=True, day_number_in_month=False, out_column="dateflag")

num_lags = 10

transform_lag = LagTransform(

in_column="target",

lags=[HORIZON + i for i in range(num_lags)],

out_column="target_lag",

)

lag_columns = [f"target_lag_{HORIZON+i}" for i in range(num_lags)]

dataset_builder_deepar = PytorchForecastingDatasetBuilder(

max_encoder_length=HORIZON,

max_prediction_length=HORIZON,

time_varying_known_reals=["time_idx"] + lag_columns,

time_varying_unknown_reals=["target"],

time_varying_known_categoricals=["dateflag_day_number_in_week"],

target_normalizer=GroupNormalizer(groups=["segment"]),

)
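The lags in this cell start at `HORIZON` for a reason: a lag feature can be passed as a known regressor only if its value is already observed for every step of the forecast. The sketch below checks this condition for a 7-step horizon; `is_known_at_forecast` is a hypothetical helper written for this illustration.

```python
HORIZON = 7
num_lags = 10

lags = [HORIZON + i for i in range(num_lags)]
lag_columns = [f"target_lag_{lag}" for lag in lags]


def is_known_at_forecast(lag, horizon):
    """Check that the lagged value is observed for every forecast step.

    Forecast step s (1..horizon) needs the target from s - lag steps after
    the last observed point, so the value is known only if s - lag <= 0.
    """
    return all(step - lag <= 0 for step in range(1, horizon + 1))


print(lags)
print(lag_columns[0], lag_columns[-1])
print(is_known_at_forecast(7, HORIZON))  # lag equal to the horizon is safe
print(is_known_at_forecast(3, HORIZON))  # a shorter lag would need future targets
```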

Now let’s run the backtest.

[12]:

from etna.models.nn import DeepARModel

model_deepar = DeepARModel(

dataset_builder=dataset_builder_deepar,

trainer_params=dict(max_epochs=150, gpus=0, gradient_clip_val=0.1),

lr=0.01,

train_batch_size=64,

)

metrics = [SMAPE(), MAPE(), MAE()]

pipeline_deepar = Pipeline(

model=model_deepar,

horizon=HORIZON,

transforms=[transform_lag, transform_date],

)

[13]:

metrics_deepar, forecast_deepar, fold_info_deepar = pipeline_deepar.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 35

3 | rnn | LSTM | 2.2 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

2.3 K Trainable params

0 Non-trainable params

2.3 K Total params

0.009 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 2.9min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 35

3 | rnn | LSTM | 2.2 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

2.3 K Trainable params

0 Non-trainable params

2.3 K Total params

0.009 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 5.3min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------------------------------

0 | loss | NormalDistributionLoss | 0

1 | logging_metrics | ModuleList | 0

2 | embeddings | MultiEmbedding | 35

3 | rnn | LSTM | 2.2 K

4 | distribution_projector | Linear | 22

------------------------------------------------------------------

2.3 K Trainable params

0 Non-trainable params

2.3 K Total params

0.009 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=150` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 7.7min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 7.7min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 1.9s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 3.9s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 5.9s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 5.9s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

Let’s compare results across different segments.

[14]:

metrics_deepar

[14]:

| | segment | SMAPE | MAPE | MAE | fold_number |
|---|---|---|---|---|---|
| 0 | segment_a | 6.352868 | 6.129626 | 32.770216 | 0 |
| 0 | segment_a | 3.673934 | 3.631974 | 18.586169 | 1 |
| 0 | segment_a | 4.346741 | 4.239817 | 23.106284 | 2 |
| 2 | segment_b | 6.138559 | 5.955817 | 15.267417 | 0 |
| 2 | segment_b | 3.833559 | 3.768375 | 9.465267 | 1 |
| 2 | segment_b | 3.281513 | 3.298270 | 7.616302 | 2 |
| 1 | segment_c | 5.416995 | 5.287058 | 9.203803 | 0 |
| 1 | segment_c | 5.808158 | 5.624216 | 10.211849 | 1 |
| 1 | segment_c | 5.375488 | 5.229208 | 9.724448 | 2 |
| 3 | segment_d | 5.030112 | 4.966045 | 41.805089 | 0 |
| 3 | segment_d | 4.040232 | 4.141372 | 32.495893 | 1 |
| 3 | segment_d | 3.253992 | 3.182566 | 28.029550 | 2 |

As before, we summarize the results with the mean value of the SMAPE metric, because it is scale-tolerant.

[15]:

score = metrics_deepar["SMAPE"].mean()

print(f"Average SMAPE for DeepAR: {score:.3f}")

Average SMAPE for DeepAR: 4.713

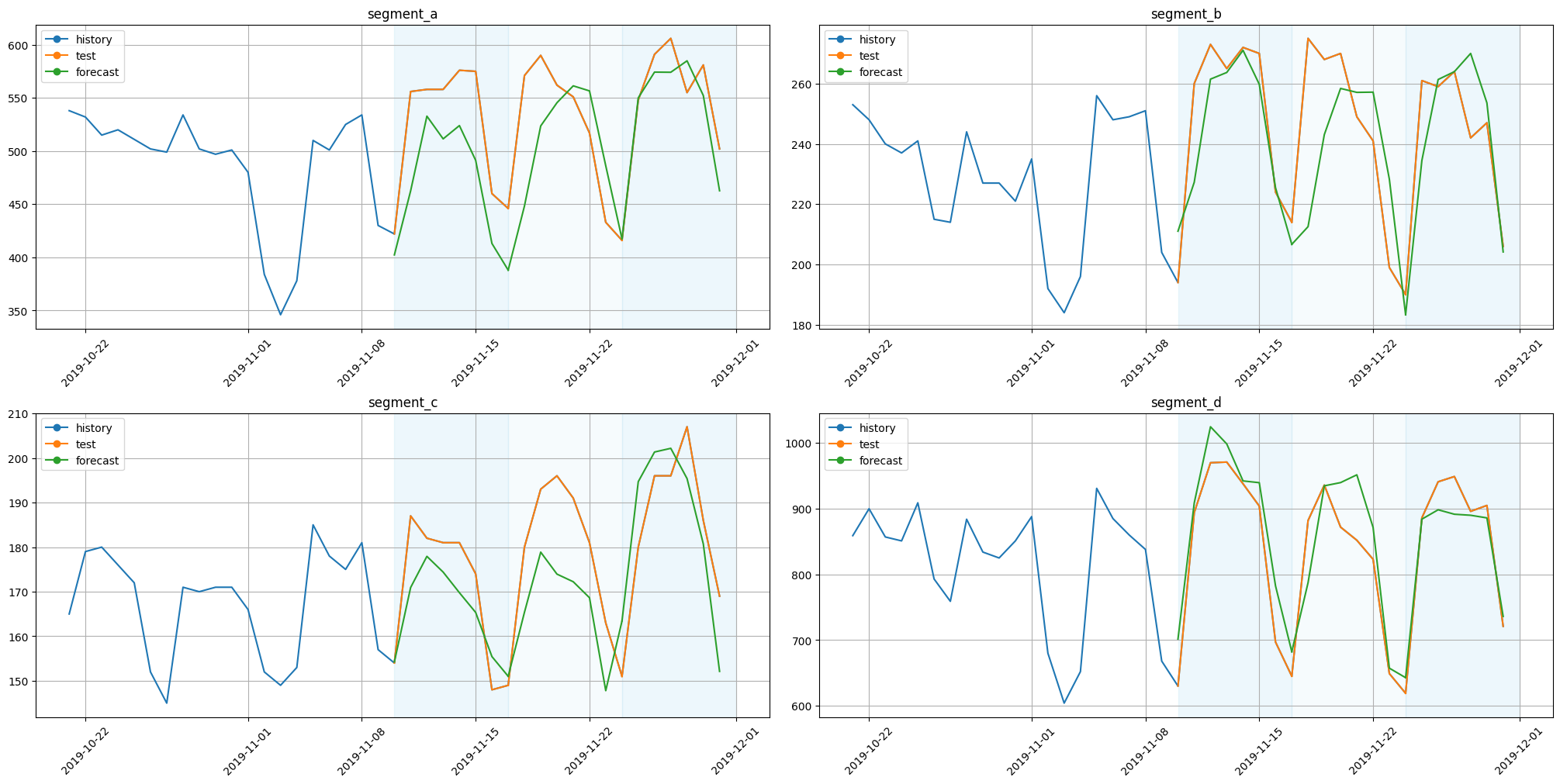

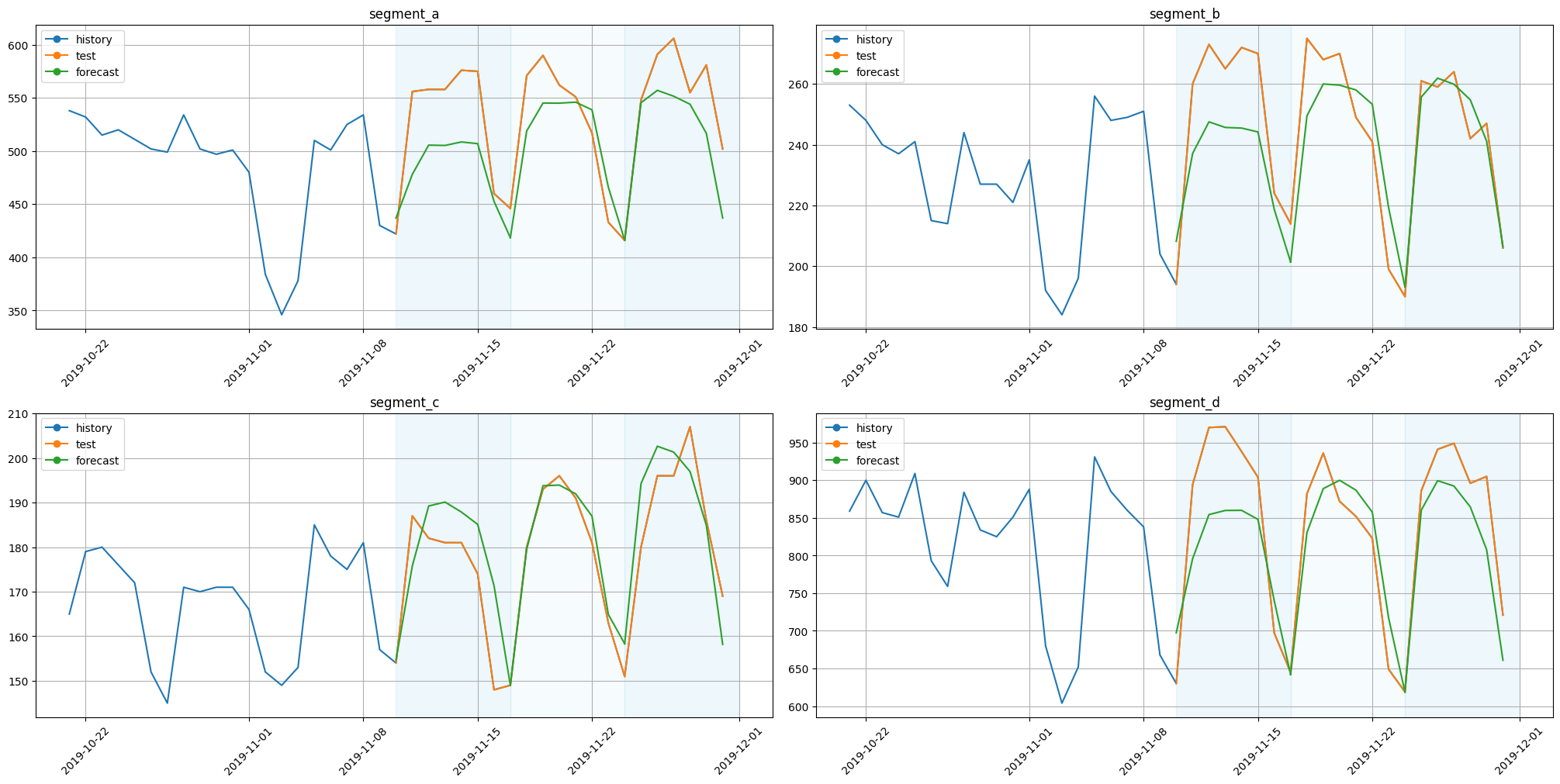

Visualize results.

[16]:

plot_backtest(forecast_deepar, ts, history_len=20)

3.2 TFT

Let’s move to the next model.

[17]:

set_seed()

Default way

[18]:

from etna.models.nn import TFTModel

model_tft = TFTModel(

encoder_length=HORIZON,

decoder_length=HORIZON,

trainer_params=dict(max_epochs=200, gpus=0, gradient_clip_val=0.1),

lr=0.01,

train_batch_size=64,

)

pipeline_tft = Pipeline(

model=model_tft,

horizon=HORIZON,

)

[19]:

metrics_tft, forecast_tft, fold_info_tft = pipeline_tft.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 0

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.7 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.8 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.2 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.4 K Trainable params

0 Non-trainable params

18.4 K Total params

0.074 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 4.3min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 0

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.7 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.8 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.2 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.4 K Trainable params

0 Non-trainable params

18.4 K Total params

0.074 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 8.9min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 0

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.7 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.8 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.2 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.4 K Trainable params

0 Non-trainable params

18.4 K Total params

0.074 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 13.5min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 13.5min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 2.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 4.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[20]:

metrics_tft

[20]:

| | segment | SMAPE | MAPE | MAE | fold_number |
|---|---|---|---|---|---|
| 0 | segment_a | 38.883908 | 32.178844 | 174.973873 | 0 |
| 0 | segment_a | 10.944611 | 10.876538 | 56.220559 | 1 |
| 0 | segment_a | 10.633444 | 10.721340 | 55.923584 | 2 |
| 2 | segment_b | 34.618967 | 43.120913 | 103.168971 | 0 |
| 2 | segment_b | 9.714098 | 10.009747 | 23.389893 | 1 |
| 2 | segment_b | 8.851617 | 9.419366 | 20.456857 | 2 |
| 1 | segment_c | 69.259289 | 106.946676 | 181.883262 | 0 |
| 1 | segment_c | 7.433419 | 7.867012 | 13.065395 | 1 |
| 1 | segment_c | 8.774654 | 9.084464 | 15.920347 | 2 |
| 3 | segment_d | 81.639359 | 57.626738 | 503.402440 | 0 |
| 3 | segment_d | 17.821837 | 16.349777 | 138.235229 | 1 |
| 3 | segment_d | 25.455609 | 22.157028 | 198.000828 | 2 |

[21]:

score = metrics_tft["SMAPE"].mean()

print(f"Average SMAPE for TFT: {score:.3f}")

Average SMAPE for TFT: 27.003

Dataset Builder

[22]:

set_seed()

transform_date = DateFlagsTransform(day_number_in_week=True, day_number_in_month=False, out_column="dateflag")

num_lags = 10

transform_lag = LagTransform(

in_column="target",

lags=[HORIZON + i for i in range(num_lags)],

out_column="target_lag",

)

lag_columns = [f"target_lag_{HORIZON+i}" for i in range(num_lags)]

dataset_builder_tft = PytorchForecastingDatasetBuilder(

max_encoder_length=HORIZON,

max_prediction_length=HORIZON,

time_varying_known_reals=["time_idx"],

time_varying_unknown_reals=["target"],

time_varying_known_categoricals=["dateflag_day_number_in_week"],

static_categoricals=["segment"],

target_normalizer=GroupNormalizer(groups=["segment"]),

)
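`GroupNormalizer(groups=["segment"])` normalizes the target separately within each segment, which matters here because segment_d is on a larger scale than segment_c. The sketch below shows the idea with a plain per-group standardization (subtract the group mean, divide by the group standard deviation). It is a simplified stand-in, not the pytorch-forecasting implementation, and `normalize_by_group` is a hypothetical helper. The input values are the first three targets of segment_c and segment_d from the table in section 1.

```python
from statistics import mean, pstdev

targets = {
    "segment_c": [92.0, 107.0, 103.0],
    "segment_d": [238.0, 358.0, 366.0],
}


def normalize_by_group(groups):
    """Standardize each group's values with that group's own mean and std."""
    normalized = {}
    for name, values in groups.items():
        center, scale = mean(values), pstdev(values)
        normalized[name] = [(v - center) / scale for v in values]
    return normalized


normalized = normalize_by_group(targets)
for name, values in normalized.items():
    print(name, [round(v, 3) for v in values])
```

After normalization both segments have zero mean and unit standard deviation, so a single network sees targets on a comparable scale.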

[23]:

model_tft = TFTModel(

dataset_builder=dataset_builder_tft,

trainer_params=dict(max_epochs=200, gpus=0, gradient_clip_val=0.1),

lr=0.01,

train_batch_size=64,

)

pipeline_tft = Pipeline(

model=model_tft,

horizon=HORIZON,

transforms=[transform_lag, transform_date],

)

[24]:

metrics_tft, forecast_tft, fold_info_tft = pipeline_tft.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 47

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.8 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.9 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.3 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.9 K Trainable params

0 Non-trainable params

18.9 K Total params

0.075 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 4.7min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 47

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.8 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.9 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.3 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.9 K Trainable params

0 Non-trainable params

18.9 K Total params

0.075 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 9.5min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

----------------------------------------------------------------------------------------

0 | loss | QuantileLoss | 0

1 | logging_metrics | ModuleList | 0

2 | input_embeddings | MultiEmbedding | 47

3 | prescalers | ModuleDict | 96

4 | static_variable_selection | VariableSelectionNetwork | 1.8 K

5 | encoder_variable_selection | VariableSelectionNetwork | 1.9 K

6 | decoder_variable_selection | VariableSelectionNetwork | 1.3 K

7 | static_context_variable_selection | GatedResidualNetwork | 1.1 K

8 | static_context_initial_hidden_lstm | GatedResidualNetwork | 1.1 K

9 | static_context_initial_cell_lstm | GatedResidualNetwork | 1.1 K

10 | static_context_enrichment | GatedResidualNetwork | 1.1 K

11 | lstm_encoder | LSTM | 2.2 K

12 | lstm_decoder | LSTM | 2.2 K

13 | post_lstm_gate_encoder | GatedLinearUnit | 544

14 | post_lstm_add_norm_encoder | AddNorm | 32

15 | static_enrichment | GatedResidualNetwork | 1.4 K

16 | multihead_attn | InterpretableMultiHeadAttention | 676

17 | post_attn_gate_norm | GateAddNorm | 576

18 | pos_wise_ff | GatedResidualNetwork | 1.1 K

19 | pre_output_gate_norm | GateAddNorm | 576

20 | output_layer | Linear | 119

----------------------------------------------------------------------------------------

18.9 K Trainable params

0 Non-trainable params

18.9 K Total params

0.075 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=200` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 14.5min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 14.5min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 2.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 4.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 6.1s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[25]:

metrics_tft

[25]:

| | segment | SMAPE | MAPE | MAE | fold_number |
|---|---|---|---|---|---|
| 0 | segment_a | 4.088854 | 3.920815 | 21.469369 | 0 |
| 0 | segment_a | 5.622753 | 5.483937 | 29.614986 | 1 |
| 0 | segment_a | 4.488632 | 4.333540 | 25.029803 | 2 |
| 2 | segment_b | 7.784580 | 7.419946 | 19.432499 | 0 |
| 2 | segment_b | 4.084682 | 4.040876 | 9.863055 | 1 |
| 2 | segment_b | 4.433536 | 4.535382 | 10.086642 | 2 |
| 1 | segment_c | 3.787958 | 3.801392 | 6.402876 | 0 |
| 1 | segment_c | 3.944129 | 3.856418 | 7.111524 | 1 |
| 1 | segment_c | 7.349261 | 7.034143 | 13.413960 | 2 |
| 3 | segment_d | 9.401569 | 9.331692 | 78.947928 | 0 |
| 3 | segment_d | 5.911709 | 6.061150 | 47.493705 | 1 |
| 3 | segment_d | 6.013355 | 5.874746 | 50.737017 | 2 |

[26]:

score = metrics_tft["SMAPE"].mean()

print(f"Average SMAPE for TFT: {score:.3f}")

Average SMAPE for TFT: 5.576

[27]:

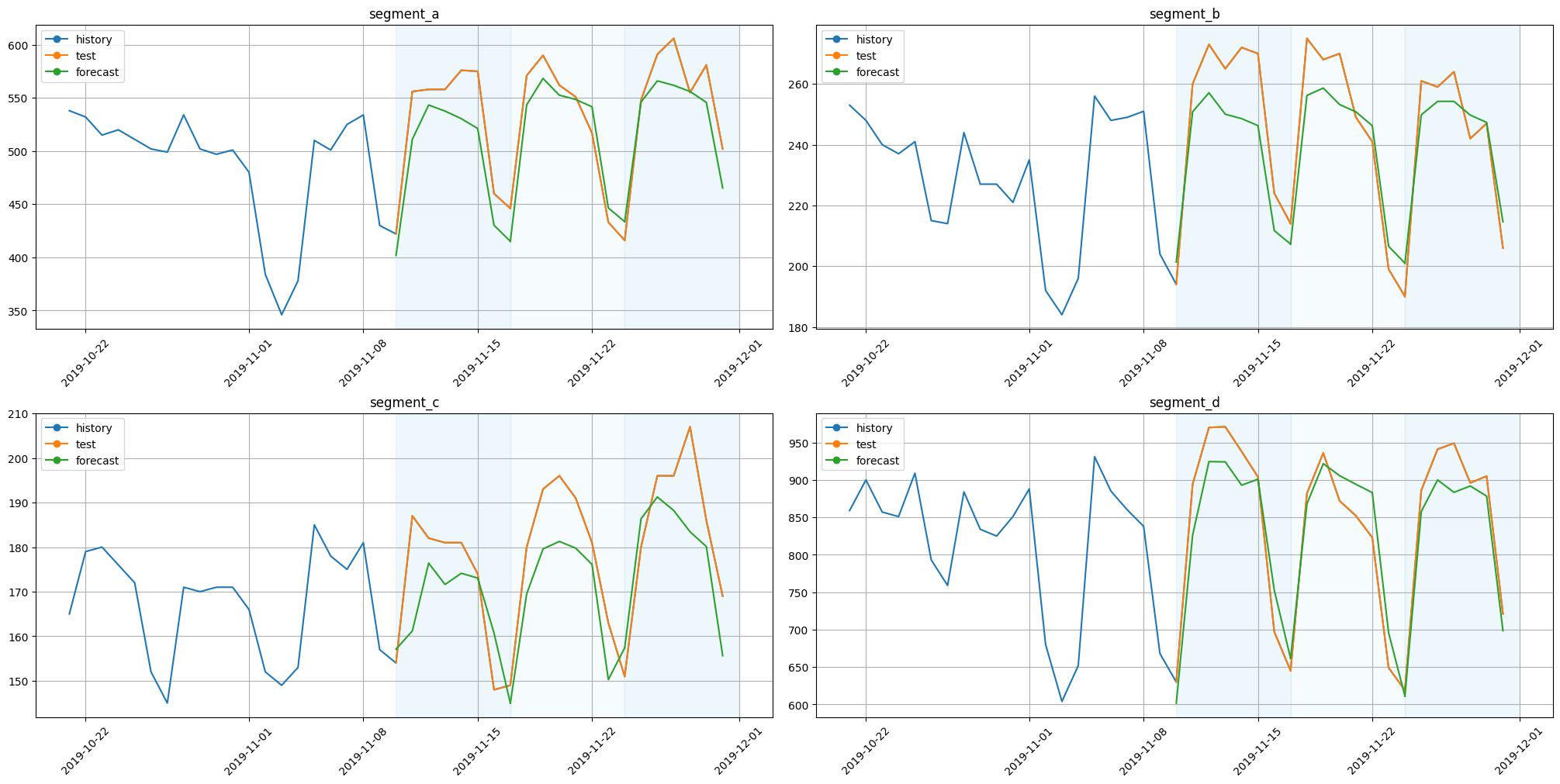

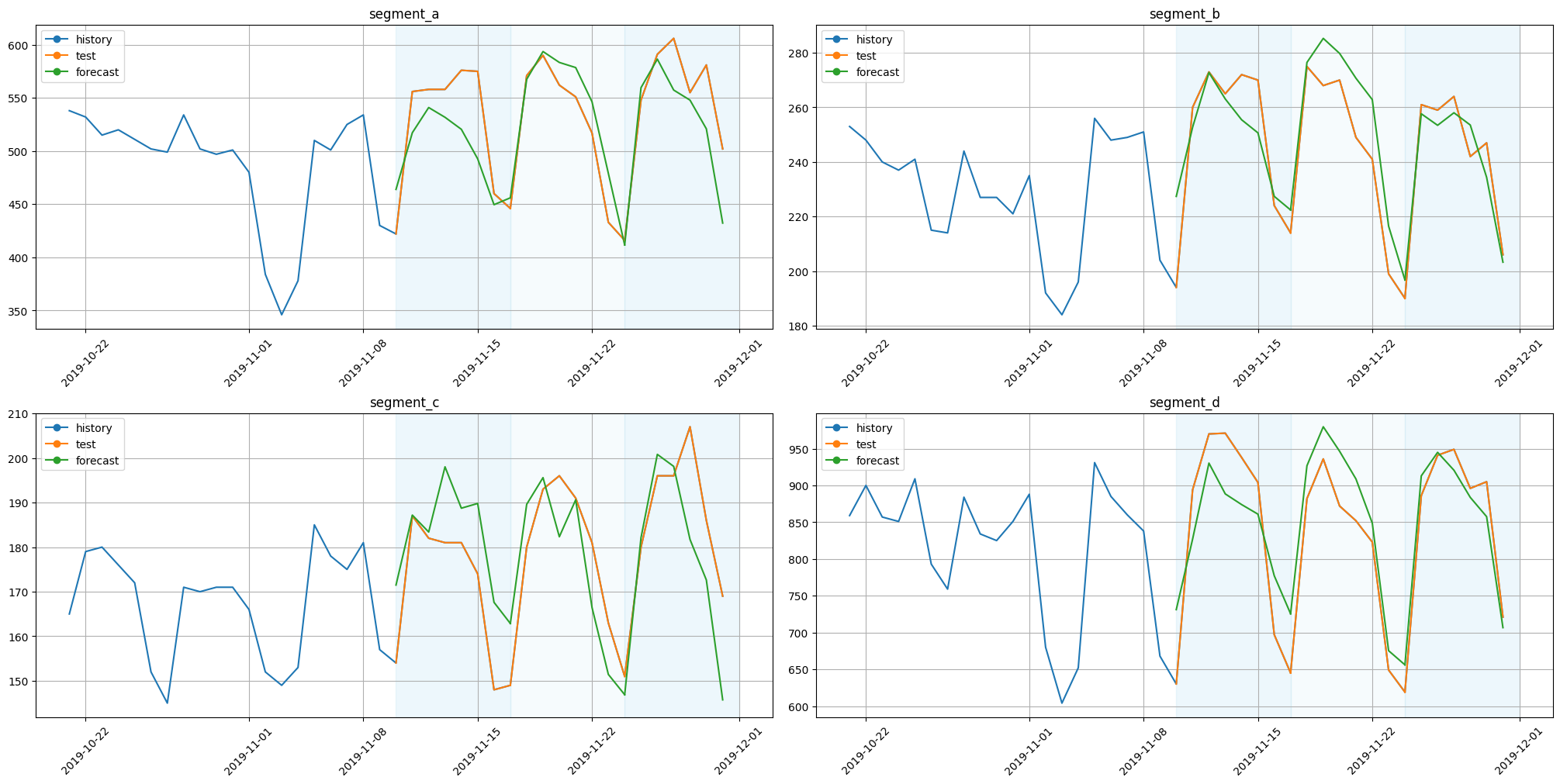

plot_backtest(forecast_tft, ts, history_len=20)

3.3 Simple model

For comparison, let’s train a much simpler model.

[28]:

model_sma = SeasonalMovingAverageModel(window=5, seasonality=7)

linear_trend_transform = LinearTrendTransform(in_column="target")

pipeline_sma = Pipeline(model=model_sma, horizon=HORIZON, transforms=[linear_trend_transform])
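For intuition, `SeasonalMovingAverageModel(window=5, seasonality=7)` can be read as: forecast each day as the average of the same weekday over the previous five weeks. The sketch below implements that reading for a one-step forecast in plain Python. `seasonal_ma_forecast` is a hypothetical helper, the series is a toy one, and the etna implementation may differ in details.

```python
def seasonal_ma_forecast(history, window, seasonality):
    """Average the values located 1..window seasons before the next time step."""
    n = len(history)
    if n < window * seasonality:
        raise ValueError("history is too short for the requested window")
    # The next step has index n; its seasonal predecessors are n - k * seasonality.
    past = [history[n - k * seasonality] for k in range(1, window + 1)]
    return sum(past) / window


# Toy series: a weekly pattern (10 * day of week) plus a slow weekly increase.
history = [10 * (day % 7) + day // 7 for day in range(37)]
forecast = seasonal_ma_forecast(history, window=5, seasonality=7)
print(forecast)
```

Because the average lags behind a trend, the pipeline above removes the linear trend with `LinearTrendTransform` before the model is applied.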

[29]:

metrics_sma, forecast_sma, fold_info_sma = pipeline_sma.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[30]:

metrics_sma

[30]:

| | segment | SMAPE | MAPE | MAE | fold_number |
|---|---|---|---|---|---|
| 0 | segment_a | 6.343943 | 6.124296 | 33.196532 | 0 |
| 0 | segment_a | 5.346946 | 5.192455 | 27.938101 | 1 |
| 0 | segment_a | 7.510347 | 7.189999 | 40.028565 | 2 |
| 2 | segment_b | 7.178822 | 6.920176 | 17.818102 | 0 |
| 2 | segment_b | 5.672504 | 5.554555 | 13.719200 | 1 |
| 2 | segment_b | 3.327846 | 3.359712 | 7.680919 | 2 |
| 1 | segment_c | 6.430429 | 6.200580 | 10.877718 | 0 |
| 1 | segment_c | 5.947090 | 5.727531 | 10.701336 | 1 |
| 1 | segment_c | 6.186545 | 5.943679 | 11.359563 | 2 |
| 3 | segment_d | 4.707899 | 4.644170 | 39.918646 | 0 |
| 3 | segment_d | 5.403426 | 5.600978 | 43.047332 | 1 |
| 3 | segment_d | 2.505279 | 2.543719 | 19.347565 | 2 |

[31]:

score = metrics_sma["SMAPE"].mean()

print(f"Average SMAPE for Seasonal MA: {score:.3f}")

Average SMAPE for Seasonal MA: 5.547

[32]:

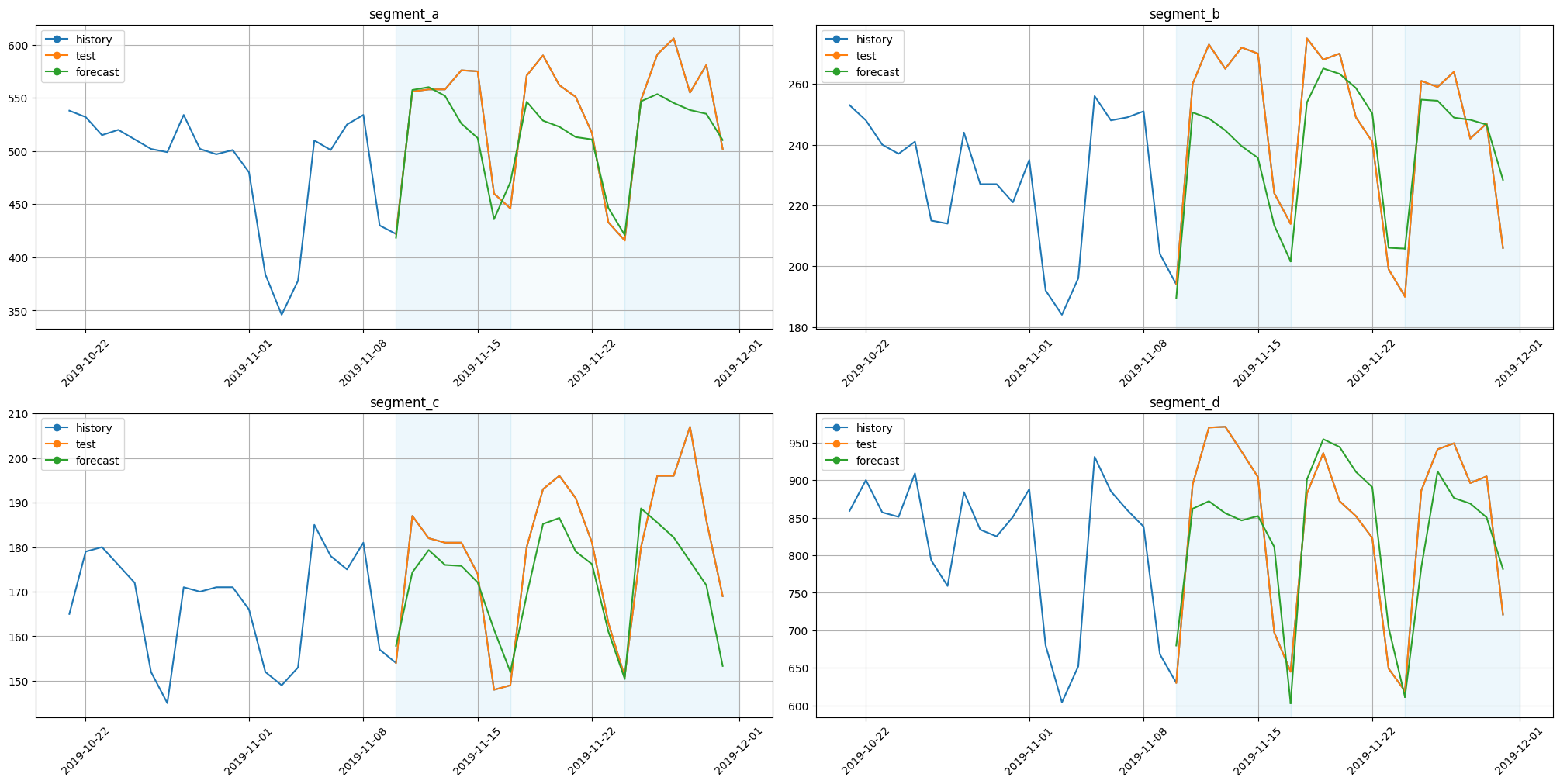

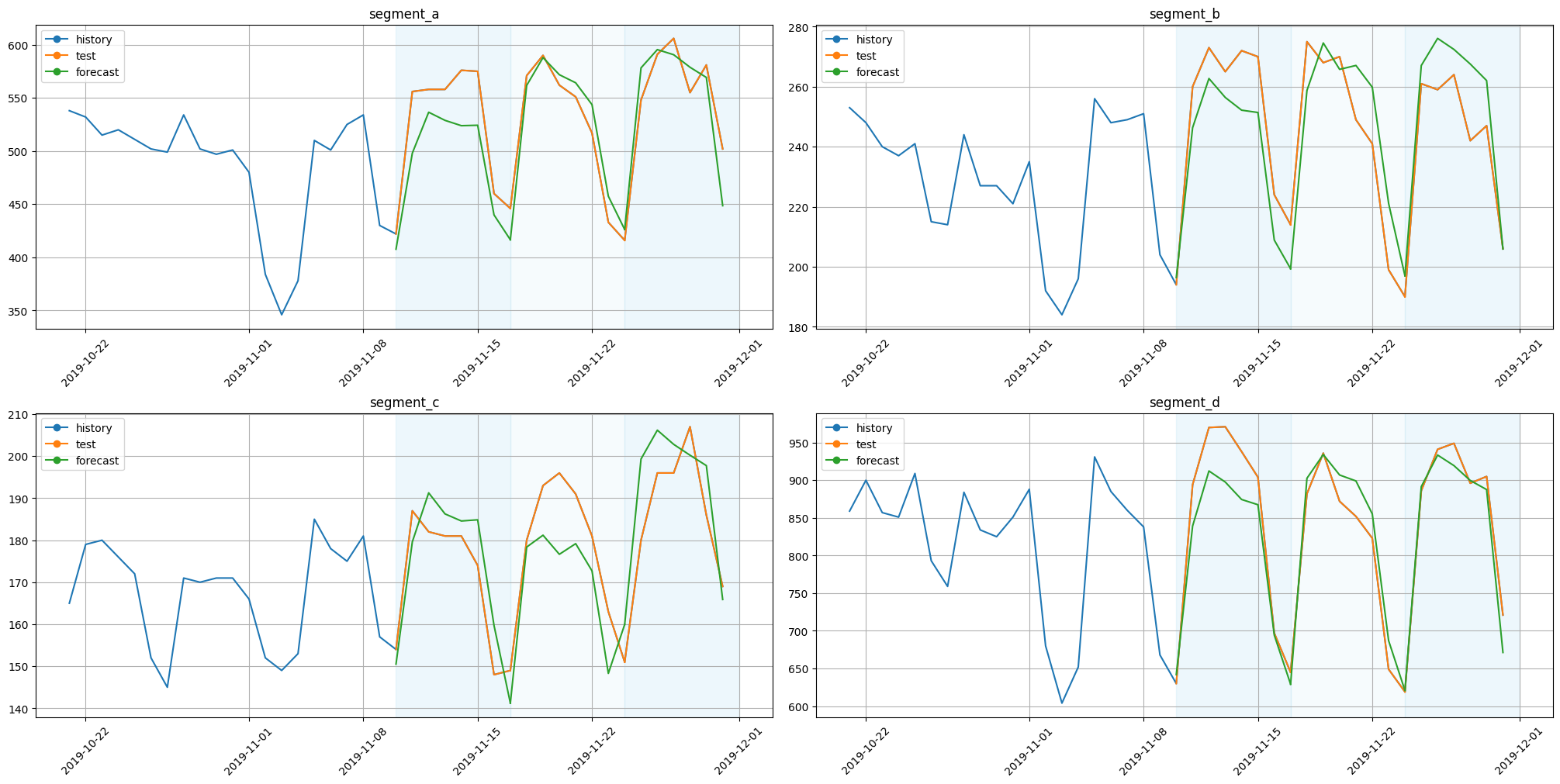

plot_backtest(forecast_sma, ts, history_len=20)

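As we can see, neural networks with extra features are competitive with this simple baseline in this particular case: DeepAR with the dataset builder is clearly better (average SMAPE 4.713 vs. 5.547), while TFT with the dataset builder is roughly on par with it (5.576). Without extra features both networks are worse than the baseline.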
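The comparison can be made explicit by collecting the average SMAPE values printed in this notebook and ranking them (lower is better). The numbers are copied from the cell outputs; the labels are chosen here for readability.

```python
# Average SMAPE values as printed by the cells in section 3.
scores = {
    "DeepAR (default)": 6.184,
    "DeepAR (dataset builder)": 4.713,
    "TFT (default)": 27.003,
    "TFT (dataset builder)": 5.576,
    "Seasonal MA": 5.547,
}

# Sort from best (lowest SMAPE) to worst.
ranking = sorted(scores.items(), key=lambda item: item[1])
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```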

4. Etna native deep models

RNNModel

We’ll use an RNN model based on an LSTM cell.

[33]:

from etna.models.nn import RNNModel

from etna.transforms import StandardScalerTransform

[34]:

model_rnn = RNNModel(

decoder_length=HORIZON,

encoder_length=2 * HORIZON,

input_size=11,

trainer_params=dict(max_epochs=5),

lr=1e-3,

)

pipeline_rnn = Pipeline(

model=model_rnn,

horizon=HORIZON,

transforms=[StandardScalerTransform(in_column="target"), transform_lag],

)
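The value `input_size=11` corresponds to the target plus the ten lag columns produced by `transform_lag`. As a sanity check, the sketch below counts the parameters of a stacked LSTM with the standard PyTorch layout (per layer: input weights, recurrent weights, and two bias vectors for each of the four gates). The hidden size of 16 and the two layers are assumptions inferred from the model summary printed during training (4.0 K LSTM parameters, 17 projection parameters); they are not set explicitly in this notebook.

```python
def lstm_param_count(input_size, hidden_size, num_layers):
    """Count parameters of a stacked unidirectional LSTM (PyTorch layout)."""
    total = 0
    layer_input = input_size
    for _ in range(num_layers):
        # 4 gates, each with input weights, recurrent weights and two biases.
        total += 4 * hidden_size * (layer_input + hidden_size + 2)
        layer_input = hidden_size  # deeper layers read the previous layer's output
    return total


num_lags = 10
input_size = 1 + num_lags        # target + lag features
hidden_size, num_layers = 16, 2  # assumed values, see the note above

rnn_params = lstm_param_count(input_size, hidden_size, num_layers)
projection_params = hidden_size + 1  # linear layer to one output: weights + bias
print(input_size, rnn_params, projection_params)
```

Under these assumptions the count is 4032 LSTM parameters and 17 projection parameters, consistent with the rounded figures in the training log.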

[35]:

metrics_rnn, forecast_rnn, fold_info_rnn = pipeline_rnn.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

---------------------------------------

0 | loss | MSELoss | 0

1 | rnn | LSTM | 4.0 K

2 | projection | Linear | 17

---------------------------------------

4.0 K Trainable params

0 Non-trainable params

4.0 K Total params

0.016 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 5.7s

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

---------------------------------------

0 | loss | MSELoss | 0

1 | rnn | LSTM | 4.0 K

2 | projection | Linear | 17

---------------------------------------

4.0 K Trainable params

0 Non-trainable params

4.0 K Total params

0.016 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 11.3s

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

---------------------------------------

0 | loss | MSELoss | 0

1 | rnn | LSTM | 4.0 K

2 | projection | Linear | 17

---------------------------------------

4.0 K Trainable params

0 Non-trainable params

4.0 K Total params

0.016 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 17.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 17.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s
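The log above shows three training runs, one per fold. As a sketch, the folds of a backtest with `n_folds=3` and a horizon of 7 can be laid out as below. We assume an expanding training window with non-overlapping test windows at the end of the series; the helper `expanding_folds` and the series length of 100 are made up for the illustration.

```python
from typing import Dict, List, Tuple


def expanding_folds(n_points: int, horizon: int, n_folds: int) -> List[Dict[str, Tuple[int, int]]]:
    """Return half-open index ranges [start, end) for the train and test part of each fold."""
    folds = []
    for k in range(n_folds):
        # The last fold ends at the end of the series, earlier folds end one horizon before
        test_end = n_points - (n_folds - 1 - k) * horizon
        test_start = test_end - horizon
        folds.append({"train": (0, test_start), "test": (test_start, test_end)})
    return folds


# Hypothetical series length
folds = expanding_folds(n_points=100, horizon=7, n_folds=3)
for fold_number, fold in enumerate(folds):
    print(f"fold {fold_number}: train={fold['train']}, test={fold['test']}")
```

Each fold refits the model from scratch on its own training part, which is why the training summary is printed three times.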

[36]:

score = metrics_rnn["SMAPE"].mean()

print(f"Average SMAPE for LSTM: {score:.3f}")

Average SMAPE for LSTM: 5.643

[37]:

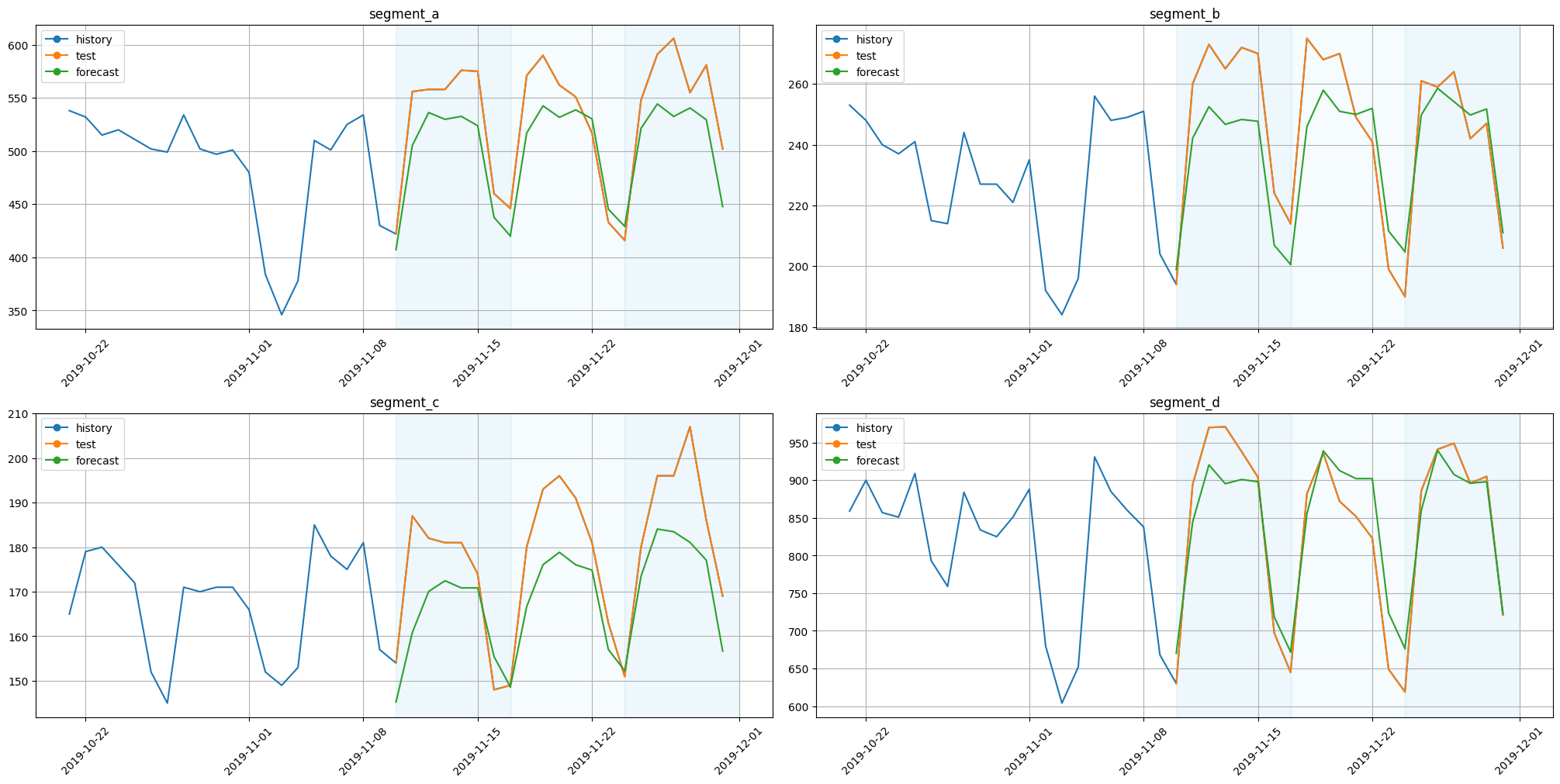

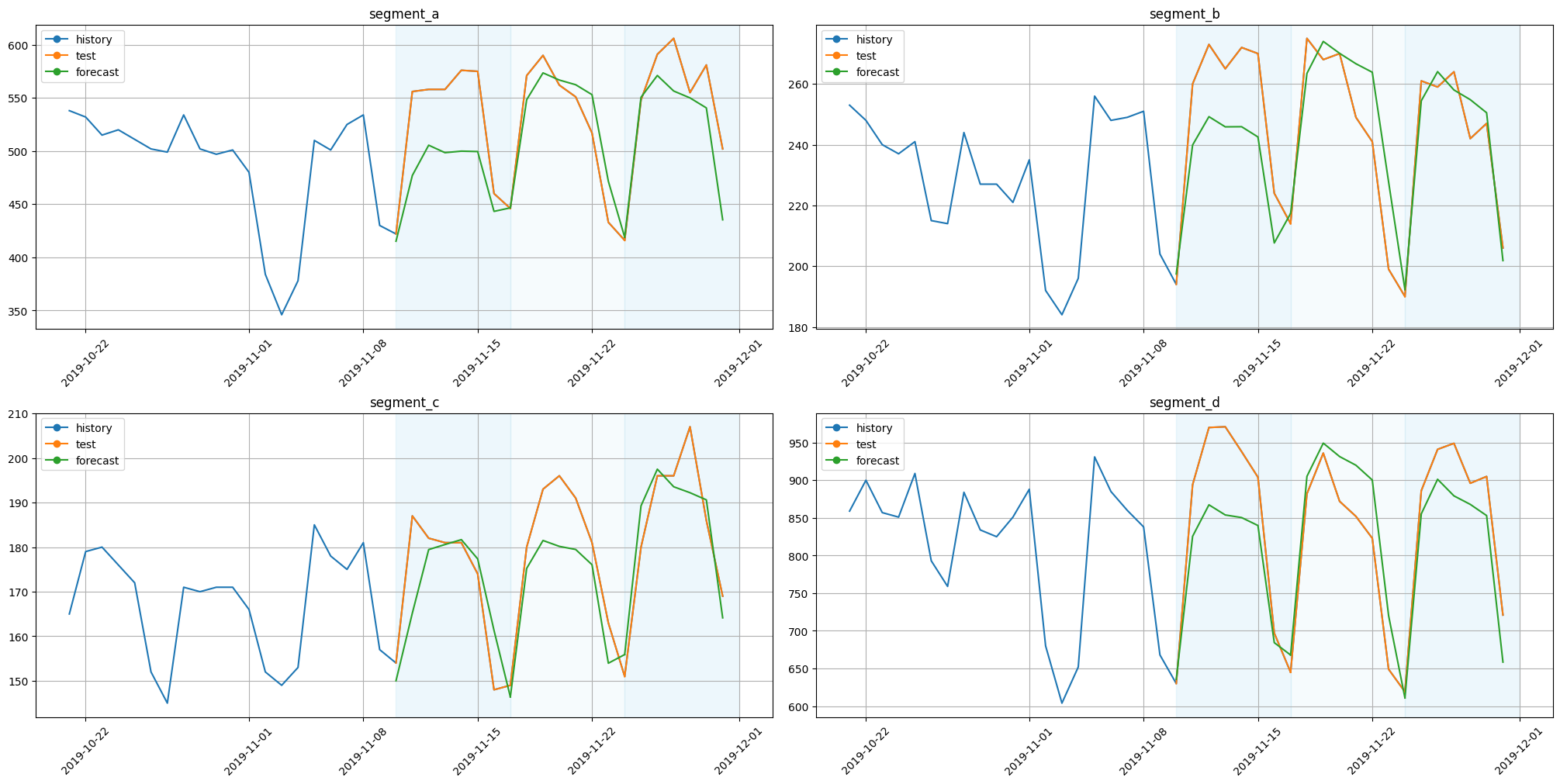

plot_backtest(forecast_rnn, ts, history_len=20)

Deep State Model¶

Deep State Model works well with multiple similar time series. It infers shared patterns from them.

We have to determine the type of seasonality in the data (based on data granularity); the SeasonalitySSM class is responsible for this. In this example, we have daily data, so we use day-of-week (7 seasons) and day-of-month (31 seasons) models. We also set the trend component using the LevelTrendSSM class. As model inputs we use the time-based features day-of-week and day-of-month, plus a time-independent feature that encodes the segment of the time series. These three features match input_size=3 in the model definition.

[38]:

from etna.models.nn import DeepStateModel

from etna.models.nn.deepstate import CompositeSSM, SeasonalitySSM, LevelTrendSSM

from etna.transforms import StandardScalerTransform, DateFlagsTransform, SegmentEncoderTransform

[39]:

HORIZON = 7

metrics = [SMAPE(), MAPE(), MAE()]

[40]:

transforms = [
    SegmentEncoderTransform(),
    StandardScalerTransform(in_column="target"),
    DateFlagsTransform(
        day_number_in_week=True,
        day_number_in_month=True,
        week_number_in_month=False,
        week_number_in_year=False,
        month_number_in_year=False,
        year_number=False,
        is_weekend=False,
        out_column="df",
    ),
]

[41]:

monthly_smm = SeasonalitySSM(num_seasons=31, timestamp_transform=lambda x: x.day - 1)

weekly_smm = SeasonalitySSM(num_seasons=7, timestamp_transform=lambda x: x.weekday())
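The `timestamp_transform` functions map each timestamp to a season index, and that index has to stay below `num_seasons`. Here is a quick check of the two mappings used above. It is a sketch that uses `datetime.date` instead of pandas timestamps (both expose `.day` and `.weekday()`); the names `monthly_index` and `weekly_index` are ours.

```python
from datetime import date, timedelta


def monthly_index(x: date) -> int:
    """Day of month shifted to start from zero: 0..30."""
    return x.day - 1


def weekly_index(x: date) -> int:
    """Day of week with Monday as zero: 0..6."""
    return x.weekday()


# Walk through every day of a leap year to cover all possible values
start = date(2020, 1, 1)
days = [start + timedelta(days=i) for i in range(366)]

monthly_values = {monthly_index(d) for d in days}
weekly_values = {weekly_index(d) for d in days}

print(f"day-of-month indices: {min(monthly_values)}..{max(monthly_values)}")
print(f"day-of-week indices:  {min(weekly_values)}..{max(weekly_values)}")
```

The `- 1` in the monthly mapping matters: without it the index for the 31st would be 31, which is out of range for 31 seasons.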

[42]:

model_dsm = DeepStateModel(
    ssm=CompositeSSM(seasonal_ssms=[weekly_smm, monthly_smm], nonseasonal_ssm=LevelTrendSSM()),
    decoder_length=HORIZON,
    encoder_length=2 * HORIZON,
    input_size=3,
    trainer_params=dict(max_epochs=5),
    lr=1e-3,
)

pipeline_dsm = Pipeline(
    model=model_dsm,
    horizon=HORIZON,
    transforms=transforms,
)

[43]:

metrics_dsm, forecast_dsm, fold_info_dsm = pipeline_dsm.backtest(ts, metrics=metrics, n_folds=3, n_jobs=1)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | RNN | LSTM | 7.2 K

1 | projectors | ModuleDict | 5.0 K

------------------------------------------

12.2 K Trainable params

0 Non-trainable params

12.2 K Total params

0.049 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 17.8s

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | RNN | LSTM | 7.2 K

1 | projectors | ModuleDict | 5.0 K

------------------------------------------

12.2 K Trainable params

0 Non-trainable params

12.2 K Total params

0.049 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 32.4s

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | RNN | LSTM | 7.2 K

1 | projectors | ModuleDict | 5.0 K

------------------------------------------

12.2 K Trainable params

0 Non-trainable params

12.2 K Total params

0.049 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=5` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 47.3s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 47.3s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 1.1s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 1.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 1.5s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 1.5s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[44]:

score = metrics_dsm["SMAPE"].mean()

print(f"Average SMAPE for DeepStateModel: {score:.3f}")

Average SMAPE for DeepStateModel: 5.523

[45]:

plot_backtest(forecast_dsm, ts, history_len=20)

N-BEATS Model¶

This architecture is based on backward and forward residual links and a deep stack of fully connected layers.

There are two types of models in the library. The NBeatsGenericModel class implements a generic deep learning model, while the NBeatsInterpretableModel is augmented with certain inductive biases to be interpretable (trend and seasonality).
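The backward and forward residual links can be sketched without any neural network. In the toy example below each block explains part of its input (the backcast) and adds a partial forecast; the next block only sees what is left. The `toy_block` function is a made-up stand-in for a stack of fully connected layers, so this shows the wiring only, not the real model.

```python
from typing import Callable, List, Tuple

Block = Callable[[List[float]], Tuple[List[float], List[float]]]


def toy_block(horizon: int) -> Block:
    """Hypothetical block: explains half of its input and forecasts half of its mean level."""

    def block(residual: List[float]) -> Tuple[List[float], List[float]]:
        backcast = [0.5 * value for value in residual]
        level = sum(residual) / len(residual)
        forecast = [0.5 * level] * horizon
        return backcast, forecast

    return block


def doubly_residual_forward(
    x: List[float], blocks: List[Block], horizon: int
) -> Tuple[List[float], List[float]]:
    """Chain blocks: subtract each backcast from the input, sum up partial forecasts."""
    residual = list(x)
    forecast = [0.0] * horizon
    for block in blocks:
        backcast, partial = block(residual)
        residual = [r - b for r, b in zip(residual, backcast)]  # backward link
        forecast = [f + p for f, p in zip(forecast, partial)]   # forward link
    return residual, forecast


x = [8.0] * 14  # constant input window of length 2 * HORIZON
residual, forecast = doubly_residual_forward(x, [toy_block(7)] * 3, horizon=7)

print(f"Unexplained residual: {residual[0]}")
print(f"Summed forecast:      {forecast[0]}")
```

With three blocks the residual shrinks from 8 to 1 and the partial forecasts 4, 2 and 1 add up to 7, so more blocks bring the forecast closer to the input level in this toy setup.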

[46]:

from etna.models.nn import NBeatsGenericModel

from etna.models.nn import NBeatsInterpretableModel

[47]:

HORIZON = 7

metrics = [SMAPE(), MAPE(), MAE()]

[48]:

model_nbeats_generic = NBeatsGenericModel(
    input_size=2 * HORIZON,
    output_size=HORIZON,
    loss="smape",
    stacks=30,
    layers=4,
    layer_size=256,
    trainer_params=dict(max_epochs=1000),
    lr=1e-3,
)

pipeline_nbeats_generic = Pipeline(
    model=model_nbeats_generic,
    horizon=HORIZON,
    transforms=[],
)

[49]:

metrics_nbeats_generic, forecast_nbeats_generic, _ = pipeline_nbeats_generic.backtest(
    ts, metrics=metrics, n_folds=3, n_jobs=1
)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 206 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

206 K Trainable params

0 Non-trainable params

206 K Total params

0.826 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=1000` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 4.7min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 206 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

206 K Trainable params

0 Non-trainable params

206 K Total params

0.826 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=1000` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 9.2min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 206 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

206 K Trainable params

0 Non-trainable params

206 K Total params

0.826 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=1000` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 13.8min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 13.8min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[50]:

score = metrics_nbeats_generic["SMAPE"].mean()

print(f"Average SMAPE for N-BEATS Generic: {score:.3f}")

Average SMAPE for N-BEATS Generic: 4.630

[51]:

plot_backtest(forecast_nbeats_generic, ts, history_len=20)

[52]:

model_nbeats_interp = NBeatsInterpretableModel(
    input_size=4 * HORIZON,
    output_size=HORIZON,
    loss="smape",
    trend_layer_size=64,
    seasonality_layer_size=256,
    trainer_params=dict(max_epochs=2000),
    lr=1e-3,
)

pipeline_nbeats_interp = Pipeline(
    model=model_nbeats_interp,
    horizon=HORIZON,
    transforms=[],
)

[53]:

metrics_nbeats_interp, forecast_nbeats_interp, _ = pipeline_nbeats_interp.backtest(
    ts, metrics=metrics, n_folds=3, n_jobs=1
)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 224 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

223 K Trainable params

385 Non-trainable params

224 K Total params

0.896 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=2000` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 3.4min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 224 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

223 K Trainable params

385 Non-trainable params

224 K Total params

0.896 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=2000` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 7.4min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

--------------------------------------

0 | model | NBeats | 224 K

1 | loss | NBeatsSMAPE | 0

--------------------------------------

223 K Trainable params

385 Non-trainable params

224 K Total params

0.896 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=2000` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 11.0min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 11.0min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[54]:

score = metrics_nbeats_interp["SMAPE"].mean()

print(f"Average SMAPE for N-BEATS Interpretable: {score:.3f}")

Average SMAPE for N-BEATS Interpretable: 5.375

[55]:

plot_backtest(forecast_nbeats_interp, ts, history_len=20)

PatchTS Model¶

A model with a transformer encoder that takes patches of the time series as input tokens (“words”) and a linear decoder.
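To see what "patches as words" means, here is a minimal sketch that cuts an encoder window into patches. The helper `make_patches` and its `stride` argument are assumptions for this illustration and are not taken from the library.

```python
from typing import List


def make_patches(window: List[int], patch_len: int, stride: int) -> List[List[int]]:
    """Cut a window into (possibly overlapping) patches of length patch_len."""
    last_start = len(window) - patch_len
    return [window[start : start + patch_len] for start in range(0, last_start + 1, stride)]


# Encoder window of length 2 * HORIZON = 14, values are just positions
encoder_window = list(range(14))

# patch_len=1: every point becomes its own token
point_tokens = make_patches(encoder_window, patch_len=1, stride=1)

# Longer overlapping patches give fewer, richer tokens
coarse_tokens = make_patches(encoder_window, patch_len=4, stride=2)

print(f"patch_len=1 -> {len(point_tokens)} tokens")
print(f"patch_len=4, stride=2 -> {len(coarse_tokens)} tokens: {coarse_tokens}")
```

With `patch_len=1`, as in the cell below, the transformer attends over 14 single-point tokens, so the patching itself adds no local context.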

[56]:

from etna.models.nn import PatchTSModel

model_patchts = PatchTSModel(
    decoder_length=HORIZON,
    encoder_length=2 * HORIZON,
    patch_len=1,
    trainer_params=dict(max_epochs=100),
    lr=1e-3,
)

pipeline_patchts = Pipeline(
    model=model_patchts, horizon=HORIZON, transforms=[StandardScalerTransform(in_column="target")]
)

metrics_patchts, forecast_patchts, fold_info_patchs = pipeline_patchts.backtest(
    ts, metrics=metrics, n_folds=3, n_jobs=1
)

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | loss | MSELoss | 0

1 | model | Sequential | 397 K

2 | projection | Sequential | 1.8 K

------------------------------------------

399 K Trainable params

0 Non-trainable params

399 K Total params

1.598 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=100` reached.

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 5.0min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | loss | MSELoss | 0

1 | model | Sequential | 397 K

2 | projection | Sequential | 1.8 K

------------------------------------------

399 K Trainable params

0 Non-trainable params

399 K Total params

1.598 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=100` reached.

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 12.3min

GPU available: True (cuda), used: False

TPU available: False, using: 0 TPU cores

IPU available: False, using: 0 IPUs

HPU available: False, using: 0 HPUs

| Name | Type | Params

------------------------------------------

0 | loss | MSELoss | 0

1 | model | Sequential | 397 K

2 | projection | Sequential | 1.8 K

------------------------------------------

399 K Trainable params

0 Non-trainable params

399 K Total params

1.598 Total estimated model params size (MB)

`Trainer.fit` stopped: `max_epochs=100` reached.

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 20.1min

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 20.1min

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.2s

[Parallel(n_jobs=1)]: Done 1 tasks | elapsed: 0.0s

[Parallel(n_jobs=1)]: Done 2 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[Parallel(n_jobs=1)]: Done 3 tasks | elapsed: 0.1s

[57]:

score = metrics_patchts["SMAPE"].mean()

print(f"Average SMAPE for PatchTS: {score:.3f}")

Average SMAPE for PatchTS: 6.297

[58]:

plot_backtest(forecast_patchts, ts, history_len=20)
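To wrap up, we can put the average SMAPE values printed above side by side. The sketch below just sorts the numbers copied from the cell outputs and compares them with the Seasonal MA baseline. The models were trained with very different numbers of epochs (from 5 to 2000), so this is a rough summary rather than a fair benchmark.

```python
# Average SMAPE values copied from the outputs above
scores = {
    "Seasonal MA": 5.547,
    "LSTM": 5.643,
    "DeepStateModel": 5.523,
    "N-BEATS Generic": 4.630,
    "N-BEATS Interpretable": 5.375,
    "PatchTS": 6.297,
}

baseline = scores["Seasonal MA"]

# Lower SMAPE is better, so sort ascending
ranking = sorted(scores.items(), key=lambda item: item[1])
better_than_baseline = [name for name, score in ranking if score < baseline]

for name, score in ranking:
    print(f"{name:<22} {score:.3f} ({score - baseline:+.3f} vs Seasonal MA)")
```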